Hi to all.

We used table adapters to create DAL (Data Access Layer) in our last project so I decided to right a blog on their advantages and disadvantages. We were using SQL Server 2005 as RDMS. We had different choices for DAL but our lead decided to go for table adapters. Although it was looking silly at the start to work with them but at the end we find table adapters, a robust and modern approch for creating DAL.(i know there are lot of modern DAL generators available) The beauty of Table Adapters was in their one click re-configuration flexibility. Basically Table adapter is automatically created when you add a new typed Dataset in your project. Each table adapter is associated with a dataset and performs DB operations only on that dataset.With all their beauties Table Adapters also have some ugly thing. I hope these thing will be fix in next release of .net

I will explain you how you can use table adapters to generate DAL for your project. So here is how you will go. Open VS 2005 and create a new website with language C#. I named it as "Table_Adapter_Test_Site".

In website solution add a new project of type "Class Library" and named it as "blog.DAL".

Add a new Dataset in bog.DAL project and named it as "CORE_PROVINCE.xsd".

After adding the Dataset you will see a blue color blank, screen something like this.

It indicates that currently there is no data table in this typed dataset.

To add a data table in this typed dataset you need a connection with the database. If server explorer window is not open then open it from View>Server Explorer

To add a data table in this typed dataset you need a connection with the database. If server explorer window is not open then open it from View>Server Explorer

In server explorer right click the "Data Connections" and click on "Add Connection..." tab. you will Add Connection screen like this. Provide data source, server name, credentials, DB name to connect to your database (MS SQL). Click on "Test Connection" button to verify that everything is ok till now.

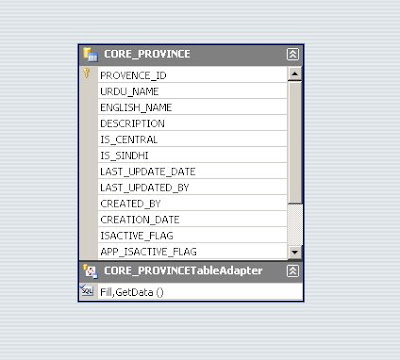

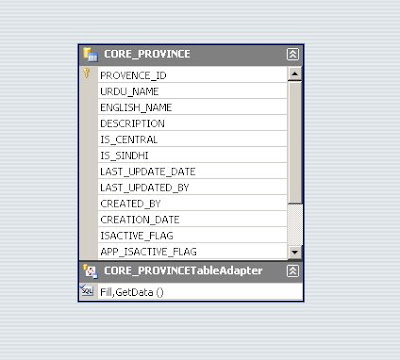

You will see your newly added connection in the Data Connection list of Server Explorer. Expand Tables and drop any table on the Dataset. I dropped Province Table (states of Pakistan) on my dataset.

Note that a table adapter is automatically created with this data table and is attached in the bottom of the table. The newly generated table adapter (CORE_ProvinceTableAdapter) has two default Query based methods, Fill and GetData and both are used to SELECT data from data base.

Note that a table adapter is automatically created with this data table and is attached in the bottom of the table. The newly generated table adapter (CORE_ProvinceTableAdapter) has two default Query based methods, Fill and GetData and both are used to SELECT data from data base.

At this stage you can directly use this table adapter to perform CRUD operations but to see what is inside running in this table adapter you can right click on the table adapter and click on "Configure..." tab.

You will see Select Statement for CORE_PROVINCE table. You can change the select statement and if required you can also use the Query Builder to generate complex (joined) select statements.

After clicking the next button you can change the names of the default select function of this table adapter. On the same window you can also see that table adapter also has generated direct method for CRUD operation on this DB table. Click next and then Finsh.

Rebuild the blog.DAL project, DAL for CORE_PROVINCE table is ready. Now you can explore methods which Typed Data Set provides for you. But i will more concentrate on user define DAL functions. Now consider if a user wants to add a complex update statement which involves CRUD operations on many DB tables as a single DB operation, he can add this functionality in some clicks. For example I have a update stored procedure defined in my Database and I want o use this procedure with this table adapter. Note that you can have much complex stored procedure and of any type. I created a stored proceduren amed as Update_Province_By_English_Name. This sp takes single parameter and returns number of rows affected. The sp is something like this.

Now to use this sp as a function of table adapter right click on table adapter and Click on "Add Query" tab.

On this window select "No Value" option and click next.

On the next window, select "Use existing Stored Procedure" and click next.

On the next window, select "Use existing Stored Procedure" and click next.

You will see the list of all stored procedures defined in the database. Select the sp that you want to use.

Provide the name of function which will execute this procedure. By default it is the same as of sp name.

Now u can see your newly added function in you table adapter.

Now u can see your newly added function in you table adapter.

Using this function is a piece of a cake.

Using this function is a piece of a cake.

Note that each table adapter created a new namespace so to use any table adapter, you have to add its namespace in your code file. You can expand the image below to get the idea of how to use this function.

Now we will discuss disadvantages of Table Adapters.

Now we will discuss disadvantages of Table Adapters.

1. The major drawback of table adapter is that it stores Database name as a prefix in the XML definition of each function you added in it and also with the default Fill and GetData functions.

The problem occurs when you create a project using one database and eventually you need to change its name Now when u change the database name and try table adapter to execute that function you will get error.

To Solve this problem you can remove the database name prefix from the XML file of dataset (XSD). To do so Find "DBObjectName" in the DAL project.

You will see all of your table adapter's default and user defined functions have Database name as the prefix in their names. Remove all DB name prefixes from DBObjectName attributes and build your project again.

2. The second disadvantage of Table Adapters is that each table adapter uses a different connection object so if you want to combine two or more table adapter functions in a single Transaction scope (.net Transactions) then either you have to start DTC service (Distributed transaction coordinator) on your MS SQL Server or to follow the following work around.

In order to solve this problem without starting the DTC service, you have to write a function in all of your table adapter classes to assign a single connection object to all of their Commands.

To do so add a new partial class file (.cs) in your dataset (.xsd) and write the following code

namespace Kalsoft.ECP.CERS.DataAccess.CORE_PROVINCETableAdapters

{

public partial class CORE_PROVINCETableAdapter

{

public void AssignConnection(DbConnection sameCon)

{

if (sameCon != null)

{

SqlConnection sqlConn = sameCon as SqlConnection;

this._connection = sqlConn;

if ((this.Adapter.InsertCommand != null))

{

this.Adapter.InsertCommand.Connection = sqlConn;

}

if ((this.Adapter.DeleteCommand != null))

{

this.Adapter.DeleteCommand.Connection = sqlConn;

}

if ((this.Adapter.UpdateCommand != null))

{

this.Adapter.UpdateCommand.Connection = sqlConn;

}

for (int i = 0; (i < i =" (i" connection =" sqlConn;">

This method will assign the provided connection object to all commands present in this table adapter. Before calling the DB operation of any table adapter in a particular Transaction scope you need to call AssignConnection method.

3. The third and the last problem with the table adapters is that if you change the default schema of their CRUD operations, it does a very silly thing with DateTime ISNULL parameters of its update procedure.

Consider you have a DateTime parameter named as "UpdateDate" and this field is also nullable. Eventually you need to change the schema of delete procedure mean you have your own delete procedure and you want to execute that delete procedure when a row deletes from the data table. You can do it by right click the configure and assign required procedure to call upon when adapters update function call on a deleted mark row.

Everything will work fine but if you change any rows values and try to call update you will see an error that

"Failed to Convert Int32 from DataTime"

what happened ??

Actually table adapter marked IsUpdateDateNull parameter of its update procedure as DateTime but in actual, it expects int32.

To solve this problem, select table adapter > open properties window > click update Command > Open Parameter Collection > Change IsUpdateDateNull field type as int32. Rebuild the DAL and try again. It will surely fix this problem.

To add a data table in this typed dataset you need a connection with the database. If server explorer window is not open then open it from View>Server Explorer

To add a data table in this typed dataset you need a connection with the database. If server explorer window is not open then open it from View>Server Explorer

Note that a table adapter is automatically created with this data table and is attached in the bottom of the table. The newly generated table adapter (CORE_ProvinceTableAdapter) has two default Query based methods, Fill and GetData and both are used to SELECT data from data base.

Note that a table adapter is automatically created with this data table and is attached in the bottom of the table. The newly generated table adapter (CORE_ProvinceTableAdapter) has two default Query based methods, Fill and GetData and both are used to SELECT data from data base.